VideoRun2D

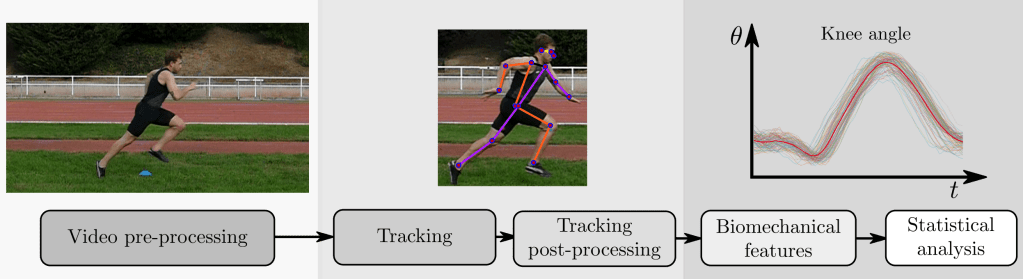

VideoRun2D is a new deep-learning system for analysing sprinting biomechanics. It tracks the body without markers and estimates joint angles during a sprint. The system has five processing modules: video pre-processing, tracking of the articular points, tracking post-processing, biomechanical features generation, and a validation system that employs statistical analysis.

This system was first described in a technical report and published at the IAPR Intl. Conf. on Pattern Recognition (ICPR), Kolkata, India, December 2024 (2nd Workshop on Facial and Body Expressions, FBE) [PDF].

An extension to the technical report, which includes four new tracker analyses, was published at the 19th IEEE International Conference on Automatic Face and Gesture Recognition, Clearwater, Florida, May 2025 (3rd Workshop on Learning with Few or No Annotated Face, Body and Gesture Data, LFA-FG2025) [PDF].

Predicted trajectories example

Proposed System

Our proposed VideoRun2D performs markerless body tracking and estimates the joint angles of each user during a sprint. To achieve effective estimation, the VideoRun2D system comprises five processing modules: video pre-processing, tracking of the articular points, tracking post-processing, biomechanical features generation, and a validation system that employs statistical analysis.
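To illustrate how the tracking post-processing and biomechanical features generation modules could fit together, here is a minimal sketch in Python. It is not the VideoRun2D implementation: the function names `joint_angle` and `moving_average`, the keypoint names `hip`, `knee` and `ankle`, the frame layout, and the choice of a moving-average smoother are all assumptions made for illustration. The sketch computes a 2D joint angle from three tracked points per frame and then smooths the resulting angle series.

```python
import math


def joint_angle(proximal, joint, distal):
    """Return the angle in degrees at `joint`, formed by the segments
    joint->proximal and joint->distal. Points are (x, y) pixel tuples."""
    ux, uy = proximal[0] - joint[0], proximal[1] - joint[1]
    vx, vy = distal[0] - joint[0], distal[1] - joint[1]
    dot = ux * vx + uy * vy
    cross = ux * vy - uy * vx
    # atan2 on |cross| and dot gives an unsigned angle in [0, 180]
    # and stays stable when the segments are nearly collinear.
    return math.degrees(math.atan2(abs(cross), dot))


def moving_average(values, window=3):
    """Centered moving average; the window shrinks at the sequence edges.
    This smoother is an assumed stand-in for the post-processing step."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


# Hypothetical tracker output: one dict of 2D keypoints per video frame.
frames = [
    {"hip": (100, 50), "knee": (100, 150), "ankle": (100, 250)},
    {"hip": (100, 50), "knee": (110, 150), "ankle": (60, 230)},
    {"hip": (100, 50), "knee": (120, 148), "ankle": (40, 190)},
]

# Knee angle per frame, then smoothed across frames.
knee_angles = [joint_angle(f["hip"], f["knee"], f["ankle"]) for f in frames]
smoothed_knee_angles = moving_average(knee_angles, window=3)
```

The angle is unsigned, so a fully extended leg gives 180 degrees and flexion reduces the value. A real pipeline would also need to handle missing or low-confidence keypoints before computing angles.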